

January 14, 2022

My recent blog was about acknowledging the difference in perspectives between the intended end users of your data product and the data team. Today I want to discuss how to use that realization to improve the quality, trustworthiness, impact, and adoption of your data products.

After all, wouldn’t it be amazing if users and stakeholders felt that the data products you develop are game changers? Yes, yes it would. Now let’s make sure they do.

The thing about use and adoption is*: you can present all the ROC curves and test set performance you like, but if the first couple of predictions a model makes happen to be wrong, there is a risk that users will not give it another chance to prove itself. As mentioned in part 1, trust is essential for adoption. If you want to persuade your users to drop their trusted rule-based solution, you need to convince them and earn their trust. The basic business rules that were previously in place may not have been great, but the business knew in which cases they worked well, and when they didn’t, the business was able to intervene accordingly. If all is well, your models will have an operational impact. And if the business doesn’t trust those models, they won’t be used. Simple as that.

There are many scientific studies and models that describe technology acceptance, and even though scholars don’t fully agree on the details, the research points to a few important factors that determine whether a product is adopted by its users**: perceived value, perceived ease of use, trust, and perceived ease of adoption.

On top of that, every experience the user has with your product changes how they perceive its value, its ease of use, its trustworthiness, and its ease of adoption. For better or worse.

So, to create value and impact with data products, you need to understand your user, their context, their operations, their objectives, their concerns.

It may seem obvious and straightforward to ensure alignment on the goal of the AI product: the business wants optimized planning, more accurate forecasts, higher conversion rates. But don't confuse understanding the objective with understanding your user.

Building a product your users can actually work with requires more than great model performance. It also requires good collaboration and mutual understanding between business and data teams.

For example: your model performs well on the majority of the articles, segments, or regions currently in scope, but then a new product launches this quarter. Now you are facing a cold start problem: your model has to make predictions for a product without any historical data. Your model was working fine, but if you work within a business that changes all the time, you need to account for that in the design of your system for it to be a solid solution for the business department.
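To make the cold start point a bit more concrete, here is a minimal Python sketch, assuming a simple weekly-sales setup. Products with too little history fall back to the average of comparable products in their category, and every forecast comes with a label saying where it came from, so users know when to apply their own judgment, much like they did with the old business rules. The threshold `MIN_HISTORY_WEEKS`, the function `forecast`, and the dictionary data layout are all hypothetical, and the product's own recent average merely stands in for a trained model.

```python
from statistics import mean

# Hypothetical threshold: minimum weeks of sales history before we trust
# the product-level forecast.
MIN_HISTORY_WEEKS = 8


def forecast(product_id, category, sales_history, category_history):
    """Return (forecast, source) so users can see where a number came from.

    sales_history: hypothetical dict mapping product_id -> list of weekly sales
    category_history: hypothetical dict mapping category -> list of weekly
        sales of comparable products
    """
    history = sales_history.get(product_id, [])
    if len(history) >= MIN_HISTORY_WEEKS:
        # Enough history: the recent average stands in for the trained model.
        return mean(history[-MIN_HISTORY_WEEKS:]), "product model"

    # Cold start: too little history, so fall back to comparable products.
    peers = category_history.get(category, [])
    if peers:
        return mean(peers), "category baseline (new product)"

    # Nothing to go on: say so explicitly instead of guessing.
    return None, "no forecast: insufficient data"


if __name__ == "__main__":
    sales = {"A1": [10, 12, 11, 9, 10, 13, 12, 11], "B2": [5, 6]}
    categories = {"snacks": [20, 22, 18]}
    print(forecast("A1", "snacks", sales, categories))  # established product
    print(forecast("B2", "snacks", sales, categories))  # new product, short history
    print(forecast("C3", "drinks", sales, categories))  # unknown category
```

The fallback itself is deliberately simple; the design choice that matters for adoption is returning the `source` label alongside the number, so the business can see when a prediction rests on thin evidence and intervene.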

A lot of attention often goes to upskilling business teams so that they sufficiently understand how these systems work: what are the inherent strengths and weaknesses of ML models, how should edge cases be handled, and which features are used?

But there is another side to this coin: data teams should sufficiently understand how the data product will be used. That understanding allows them to make good design decisions: decisions that help their users understand the product, so they can actually work with it to achieve their objectives. So you should ask your users things like:

Let’s take a step back and ask ourselves: "How does the user perceive our data product?"

Image source: Norman, D. (2013). The design of everyday things: Revised and expanded edition. Basic Books.

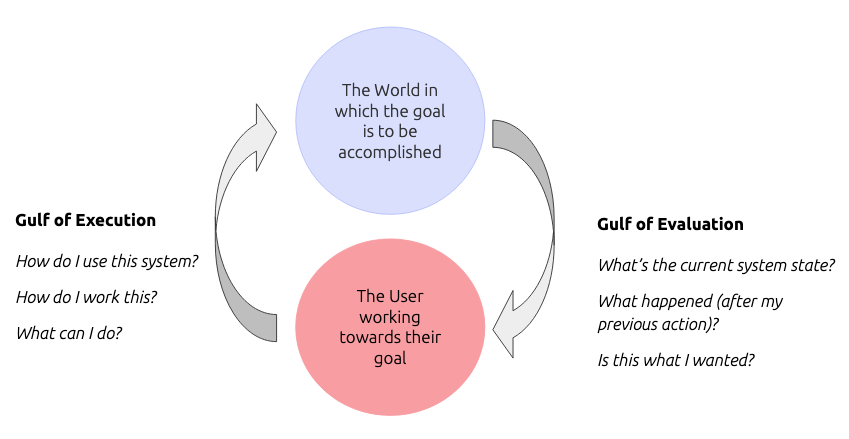

Following Don Norman***, let's look at a user trying to accomplish a goal in the outside world by performing actions. The gap between that goal and knowing which actions will achieve it is what Norman, in his book The Design of Everyday Things, calls the Gulf of Execution: the user is trying to figure out how to use the product, how to interact with it, and what they can do with it. After trying out an action, the user observes its effect. The gap between that effect and knowing whether the goal was met is what Norman calls the Gulf of Evaluation. The user asks themselves: what happened after my input? Did I get the expected or desired result? From there, the user may plan a different action, try something else, or decide that your product is in fact not helping them at all and stop using it.

Image source: Brajdic, A. (2019). Understanding mental and conceptual models in product design. Safety Culture, on uxdesign.cc.

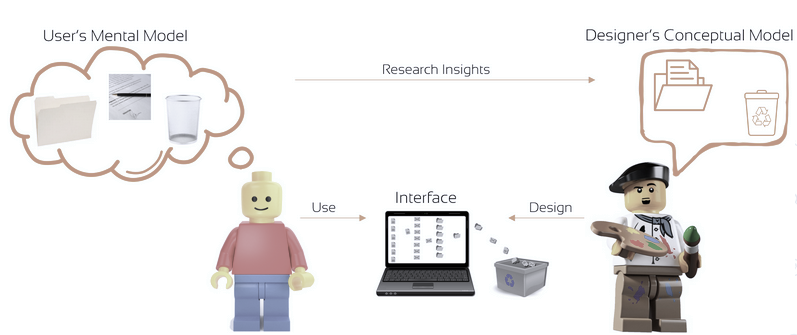

When we give users a tool to support their activity, they will try to form a mental model of how the tool works or behaves, so that they can use it effectively to accomplish their goal.

A mental model, simply put, consists of causal rules such as: “If I perform action P, the effect will be Q.” Based on this mental model, the user navigates the system and uses it to accomplish their goal.

The biggest challenge in design is to build a product that its users can understand in the context of their task, so they can use it effectively. If we understand how the intended users think, what state of mind they are in when they use the AI product, what they are trying to achieve, and what prior knowledge or skills they have, we can create a design that is intuitive for them. We can make the design look familiar to them, as in this example, where the icons resemble real-world objects. Reusing familiar elements helps users recognize what they can do and how to achieve what they want.

The thing is: if you don’t know your users all that well, you will make assumptions about their knowledge, interpretation, or mindset. If you don’t check those assumptions with your users, they may well be wrong. And if they are wrong, your users may not be able to build the mental models they need to use your product effectively. Not only could this lead to various kinds of user errors, and all the damage those errors cause; it may also lead your user group not to adopt your product at all. If the system does not produce value for your users, for example because it is hard to use or to implement, or because it cannot be trusted, they will not adopt it.

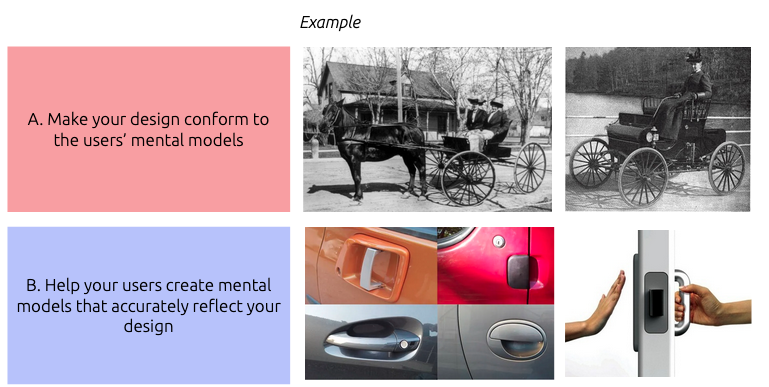

So, how do we design products that are easy to use and understand? Well, there are two ways to go about it:

1) We can make the system conform to our users' mental models (assuming the mental models across our user base are largely similar).

2) We can help our users build mental models that accurately reflect our design, for example by explaining things better, or by letting the design itself signal how it is meant to be used. You can add a tutorial or documentation, or offer workshops. Of course, any such measure should be evaluated for its effectiveness in actually helping your users build the correct mental model.

Having an accurate mental model of a system often implies a certain level of knowledge and skill. But knowledge and skill aren’t things we were born with - we acquire them. And with new knowledge and skill our perspective changes.

For instance, car mechanics will look at a car differently than a person who has no clue about the internal workings of a car. Knowing what to look for, or listen for, changes our attention and perspective.

So if we design a product that is complex or unfamiliar to our target users, while it is clear that they lack the knowledge and skills to deal with that complexity, we must either make it simpler or help them develop the knowledge and skills needed to use it.

If users don’t understand our product, then we're to blame. We have either failed to explain it well enough, or we have failed to know our user well enough.

Today's blog was about the basics of human-centered design. My next and final blog in this series will focus on the design process: how can we talk to and interact with our users to find out what they know, what they need, and what their limitations are?

* Inspired by Slaff, T. Data science as a product: Going from MVP to production. Picnic.

** Inspired by Kaasinen, E. (2005). User acceptance of mobile services: Value, ease of use, trust and ease of adoption.

*** Norman, D. (2013). The design of everyday things: Revised and expanded edition. Basic Books.

**** Brajdic, A. (2019). Understanding mental and conceptual models in product design. Safety Culture, on uxdesign.cc.